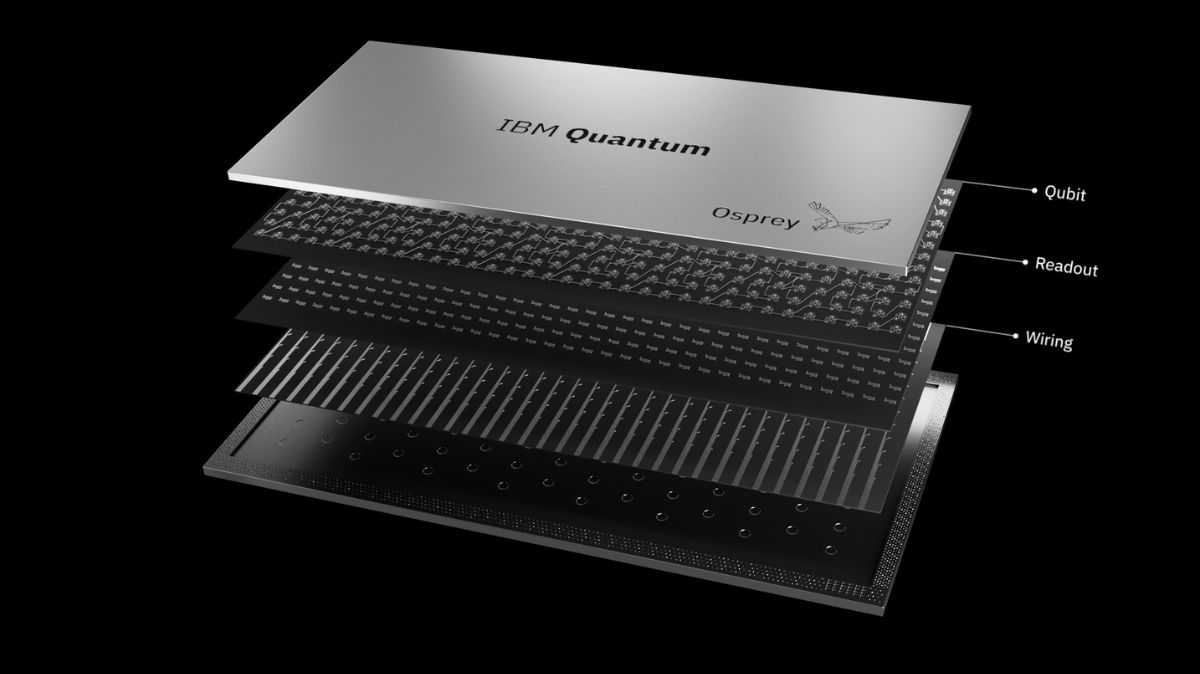

IBM Quantum CPU

IBM has once again advanced the field of quantum computing, with significant developments in software frameworks, quantum processors, and worldwide deployments aimed at achieving quantum advantage by 2026 and fault-tolerant quantum computing by 2029. These advances accelerate a decade-long global race to build scalable, dependable quantum machines that can solve problems well beyond the reach of classical computers.

From NISQ to Quantum Advantage

Quantum computing has long promised to transform industries such as drug development, materials research, and encryption. Realizing these benefits, however, depends on overcoming persistent problems: high error rates, a shortage of qubits, and decoherence, in which fragile quantum states degrade too quickly to support complex computations.

IBM’s quantum initiative tackles these problems on several fronts, including hardware engineering, error suppression, and joint deployments with commercial, government, and academic partners. At its Quantum Developer Conference in November 2025, the company showcased a range of new processors and algorithms, demonstrating concrete progress towards useful quantum systems.

The Nighthawk and Loon Processors: A Leap in Connectivity and Performance

The IBM Quantum Nighthawk processor, a next-generation superconducting device with 120 qubits linked by 218 tunable couplers for improved qubit connectivity, is at the heart of IBM’s latest announcements. The design lets researchers run quantum circuits with up to 5,000 two-qubit gates, far more complex circuits than previous processors could handle. The number of two-qubit gates a circuit can contain is a crucial criterion for evaluating realistic quantum workloads.
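
As a rough illustration of what these figures imply, the sketch below does the arithmetic for a square-lattice chip. The 10 × 12 grid is an assumption made here, not IBM’s published layout; it is chosen only because it reproduces both the 120-qubit and 218-coupler figures exactly. Because each qubit can take part in at most one two-qubit gate at a time, the sketch also estimates the minimum two-qubit depth a 5,000-gate circuit would need on such a chip.

```python
# Back-of-envelope arithmetic for a square-lattice processor.
# ASSUMPTION: the 10 x 12 layout is hypothetical; it is used only because it
# reproduces the 120-qubit / 218-coupler figures quoted in the article.

def grid_couplers(rows: int, cols: int) -> int:
    """Number of nearest-neighbour couplers (edges) in a rows x cols square grid."""
    horizontal = rows * (cols - 1)  # links between neighbours within each row
    vertical = (rows - 1) * cols    # links between neighbouring rows
    return horizontal + vertical


def max_gates_per_layer(n_qubits: int) -> int:
    """Each qubit joins at most one two-qubit gate per parallel layer."""
    return n_qubits // 2


def min_two_qubit_depth(gate_budget: int, gates_per_layer: int) -> int:
    """Fewest layers that can hold gate_budget gates (ceiling division)."""
    return -(-gate_budget // gates_per_layer)


ROWS, COLS = 10, 12  # hypothetical layout
qubits = ROWS * COLS
couplers = grid_couplers(ROWS, COLS)
per_layer = max_gates_per_layer(qubits)

print(f"{qubits} qubits, {couplers} couplers, <= {per_layer} parallel two-qubit gates per layer")
print(f"5,000 two-qubit gates -> two-qubit depth of at least {min_two_qubit_depth(5_000, per_layer)}")
```

Real circuits rarely keep every qubit busy in every layer, so their actual depth would be larger than this lower bound.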

This represents a roughly 20–30% increase in circuit complexity compared with previous IBM processors and is an important first step towards circuits with tens of thousands of gate operations, the scale expected to be needed to demonstrate true quantum advantage. Future generations of Nighthawk could support up to 7,500 gates by the end of 2026 and possibly up to 15,000 gates by 2028, enabling deeper investigation of quantum algorithms.
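
Why gate count matters so much can be seen with a deliberately crude model: if every gate fails independently with probability ε, a circuit of G gates runs without any error with probability (1 − ε)^G. The sketch below, which assumes that simple model and ignores error mitigation and the other techniques used in practice, shows how small the per-gate error must become as the gate budgets quoted above grow. The 0.1% error rate and 0.5 target fidelity are illustrative choices, not Nighthawk specifications.

```python
# Crude independent-error model: F = (1 - eps) ** G.
# ASSUMPTION: error rates and the 0.5 target are illustrative, not IBM figures.

# Two-qubit gate budgets quoted in the article.
GATE_BUDGETS = {"Nighthawk (current)": 5_000, "end of 2026": 7_500, "by 2028": 15_000}


def naive_fidelity(gates: int, error_per_gate: float) -> float:
    """Probability that no gate fails when each fails independently."""
    return (1.0 - error_per_gate) ** gates


def max_error_for(gates: int, target_fidelity: float) -> float:
    """Largest per-gate error for which naive_fidelity stays >= target_fidelity."""
    return 1.0 - target_fidelity ** (1.0 / gates)


for label, gates in GATE_BUDGETS.items():
    eps = max_error_for(gates, 0.5)
    print(f"{label:>19}: {gates:>6} gates need per-gate error <= {eps:.2e} for F >= 0.5")

print(f"5,000 gates at 0.1% error each: F ~ {naive_fidelity(5_000, 1e-3):.4f}")
```

In this model the tolerable per-gate error shrinks roughly in inverse proportion to the gate count, which is one way to see why longer circuits and lower error rates have to advance together.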

Alongside Nighthawk, IBM’s experimental Loon processor demonstrates new ways to connect qubits across larger devices, laying the groundwork for future machines that go beyond today’s nearest-neighbor coupling. These architectural improvements target one of the main obstacles on the road to fault tolerance: scaling up quantum processors without a corresponding rise in error rates.
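
A toy routing model shows why moving beyond nearest-neighbor coupling matters. When only adjacent qubits are coupled, a two-qubit gate between distant qubits first needs SWAP operations to bring them together, and each SWAP is commonly decomposed into three CNOTs. The sketch assumes grid coordinates, shortest-path routing, and no swapping back; the `long_range_link` flag is a hypothetical stand-in for a direct coupler between distant qubits, not a model of how Loon’s couplers or IBM’s compiler actually work.

```python
# Toy routing model on a qubit grid.
# ASSUMPTIONS: shortest-path SWAP routing, no swapping back, SWAP = 3 CNOTs.
# `long_range_link` is hypothetical and simply means "a direct coupler exists".

Site = tuple[int, int]


def manhattan(a: Site, b: Site) -> int:
    """Grid distance between two qubit sites."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def cnot_cost(a: Site, b: Site, long_range_link: bool = False) -> int:
    """Two-qubit gates needed to perform one CNOT between sites a and b."""
    d = manhattan(a, b)
    if d == 0:
        raise ValueError("a and b must be different qubits")
    if long_range_link or d == 1:
        return 1             # direct coupler: the CNOT alone
    swaps = d - 1            # move qubit a along a shortest path until adjacent to b
    return 3 * swaps + 1     # each SWAP costs 3 CNOTs, then the CNOT itself


for target in [(0, 1), (0, 5), (3, 4)]:
    nearest = cnot_cost((0, 0), target)
    direct = cnot_cost((0, 0), target, long_range_link=True)
    print(f"(0, 0) -> {target}: {nearest} gates nearest-neighbor, {direct} with a direct link")
```

Every extra gate is another chance for an error, so cutting routing overhead is one way to grow a device without a matching growth in accumulated errors.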

Quantum Advantage and Fault Tolerance: Milestones on the 2026–2029 Horizon

IBM has reaffirmed its goal of reaching quantum advantage, the point at which a quantum computer outperforms classical machines on a practical problem, by the end of 2026. Beyond that, its roadmap targets fault-tolerant quantum computing by 2029, when error-corrected logical qubits should deliver genuinely dependable, scalable performance for complex industrial and scientific applications.

Unlike today’s noisy intermediate-scale quantum (NISQ) systems, fault-tolerant machines would support long, dependable sequences of operations by suppressing errors with logical qubits, each encoded across multiple physical qubits. IBM’s plan calls for a multi-stage development of processors and systems, including Starling, which is expected to have hundreds to thousands of logical qubits, and its successor Blue Jay, envisioned as a large-scale, fault-tolerant machine with millions of physical qubits.
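
The idea of spreading one logical qubit across several physical qubits can be illustrated with the simplest possible code: a classical repetition code decoded by majority vote. This is a textbook toy, not IBM’s scheme; it only protects against bit flips, whereas real quantum codes must also handle phase errors. Assuming each of n copies flips independently with probability p, decoding fails when a majority of copies flip:

```python
from math import comb


def logical_error_rate(p: float, n: int) -> float:
    """Probability that majority voting over n copies decodes the wrong value,
    assuming each copy flips independently with probability p."""
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd number so majority vote never ties")
    majority = n // 2 + 1  # decoding fails once a majority of copies has flipped
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(majority, n + 1))


for p in (0.01, 0.6):
    rates = [logical_error_rate(p, n) for n in (1, 3, 5, 7)]
    print(p, ["%.3g" % r for r in rates])
```

With p = 0.01, adding copies drives the logical error rate down rapidly (3 copies give 3p² − 2p³ ≈ 0.0003); with p = 0.6, adding copies makes things worse. That crossover is a toy version of the error-correction threshold: encoding only helps once physical error rates are low enough, and real quantum codes have much lower thresholds than this classical example.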

Commercial Viability: Partnerships and Real-World Uses

IBM is also pushing quantum computing beyond laboratory benchmarks. In late 2025, HSBC reported that using IBM’s Heron quantum processors in trading-algorithm experiments yielded an improvement of up to 34% over standard classical techniques. This is an early sign that quantum machines may be starting to deliver value in real business applications.

In a further step towards commercialization, IBM was selected for Stage B of DARPA’s Quantum Benchmarking Initiative, which aims to rigorously assess whether fault-tolerant quantum computers are viable at industrial scale. The selection reflects the strategic interest of government and defence organizations in quantum technologies as essential parts of future infrastructure.

Global Deployment and Collaborative Ecosystems

IBM’s goals for quantum technology go far beyond North America. Europe’s first IBM Quantum System Two, powered by a 156-qubit Heron processor, will be housed at the IBM-Euskadi Quantum Computational Centre in San Sebastián, Spain, extending the continent’s quantum ecosystem and research capacities.

Similarly, plans are under way to build a major quantum computing facility in Andhra Pradesh’s Quantum Valley Tech Park, which will house India’s largest quantum processor to date. IBM and partners such as Tata Consultancy Services (TCS) will support both the hardware installation and the development of local quantum talent and applications, creating new opportunities in Asia’s fast-changing technology landscape.

In Japan, IBM’s quantum systems will be integrated with the Fugaku supercomputer at the RIKEN Centre for Computational Science. This is a notable effort to build hybrid computing platforms in which quantum processors and classical supercomputers work side by side, accelerating research in fields ranging from complex-systems modelling to materials science.

Software, Algorithms, and Broader Quantum Ecosystem

Hardware innovations alone are not enough without strong software and computational frameworks. IBM’s Qiskit, a key layer of the quantum computing stack, continues to evolve, letting developers around the world build, simulate, and run quantum circuits on IBM’s cloud-accessible systems. Within Qiskit’s open ecosystem, collaboration with academic and business partners helps ensure that hardware advances are quickly turned into tools useful to commercial developers and researchers alike.
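
To show what simulating (“modelling”) a circuit means before it runs on hardware, here is a dependency-free statevector sketch of the two-qubit Bell-state circuit, which in Qiskit would be written as `QuantumCircuit(2)` followed by `h(0)` and `cx(0, 1)`. The helper functions below are hypothetical, written only for illustration, and are not part of Qiskit’s API. Qubit 0 is treated as the least significant bit, matching Qiskit’s bit-ordering convention.

```python
import math


def apply_h(state: list[complex], q: int) -> list[complex]:
    """Apply a Hadamard gate to qubit q (qubit 0 = least significant bit)."""
    s = 1 / math.sqrt(2)
    new = state[:]
    for i in range(len(state)):
        if not (i >> q) & 1:       # visit each (bit q = 0, bit q = 1) pair once
            j = i | (1 << q)
            a, b = state[i], state[j]
            new[i] = s * (a + b)
            new[j] = s * (a - b)
    return new


def apply_cx(state: list[complex], control: int, target: int) -> list[complex]:
    """Apply a CNOT: flip the target bit of every basis state whose control bit is 1."""
    new = state[:]
    for i in range(len(state)):
        if (i >> control) & 1:
            new[i] = state[i ^ (1 << target)]
    return new


def probabilities(state: list[complex], n_qubits: int) -> dict[str, float]:
    """Measurement probabilities keyed by bitstring (qubit 0 is the rightmost character)."""
    return {format(i, f"0{n_qubits}b"): abs(a) ** 2 for i, a in enumerate(state) if abs(a) > 1e-12}


n = 2
state = [1 + 0j] + [0j] * (2**n - 1)  # start in |00>
state = apply_h(state, 0)              # put qubit 0 into superposition
state = apply_cx(state, 0, 1)          # entangle qubit 1 with qubit 0
print(probabilities(state, n))         # Bell state: '00' and '11', each ~0.5
```

On real hardware the same circuit would be sampled many times, and noise would add small probabilities for `'01'` and `'10'`.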

IBM also participates in community-led projects such as a quantum advantage tracker, which compares quantum results against classical simulation methods to test claims of quantum utility across problem classes.
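
One reason brute-force classical simulation falls behind is memory: a full statevector simulator stores 2^n complex amplitudes for n qubits. Assuming 16 bytes per amplitude (one double-precision complex number), the cost doubles with every added qubit. Smarter classical methods, such as tensor networks, can sidestep this for some circuits, which is exactly why comparisons against the best classical methods need careful tracking. The 120-qubit row below simply reuses Nighthawk’s qubit count as a reference point.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to hold all 2**n amplitudes; 16 bytes = one complex128 number."""
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (30, 40, 50, 120):
    gib = statevector_bytes(n) / 2**30  # convert bytes to GiB
    print(f"{n:>3} qubits: {gib:.3g} GiB")
```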

Strategic Impact and Future Outlook

IBM aims to make quantum computing essential to industrial computing and science. To bring it to real-world applications, the company combines raw hardware performance, error-correction technologies, hybrid classical-quantum workflows, and global system deployments.
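
A hybrid classical-quantum workflow typically wraps a quantum circuit in a classical optimization loop: the quantum side estimates an expectation value, and the classical side updates the circuit’s parameters. The sketch below assumes a one-qubit circuit RY(θ)|0⟩ measured in Z, whose expectation value is cos θ; `quantum_expectation` is a hypothetical stand-in that computes this exactly rather than calling real hardware. Gradients use the parameter-shift rule, which for rotation gates such as RY gives an exact derivative from two extra circuit evaluations.

```python
import math


def quantum_expectation(theta: float) -> float:
    """Hypothetical stand-in for a hardware call: <Z> after RY(theta)|0> equals cos(theta)."""
    return math.cos(theta)


def parameter_shift_grad(f, theta: float) -> float:
    """Exact gradient for rotation gates: (f(theta + pi/2) - f(theta - pi/2)) / 2."""
    shift = math.pi / 2
    return (f(theta + shift) - f(theta - shift)) / 2


def minimise(f, theta: float, learning_rate: float = 0.4, steps: int = 100) -> float:
    """Classical gradient-descent loop that repeatedly queries the 'quantum' function."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_grad(f, theta)
    return theta


theta_opt = minimise(quantum_expectation, theta=0.1)
print(f"theta = {theta_opt:.4f}, <Z> = {quantum_expectation(theta_opt):.4f}")
```

On real hardware each call would return a noisy estimate built from repeated shots, so step sizes and stopping rules need more care than in this noiseless sketch.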

While rivals draw media attention with experimental demonstrations and alternative qubit technologies, IBM’s comprehensive strategy emphasises integration, scalability, and commercial applicability. Its open-source software, public benchmarks, and partnerships place the company at the core of the fast-growing quantum ecosystem.

Challenges remain: developing algorithms that outperform classical approaches in real settings, scaling up qubit counts while keeping error rates low, and building dependable quantum-classical hybrid workflows. Even so, IBM’s recent processor advances, together with its industry deployments, commercial use cases, and strong collaborations, mark a big step towards the long-awaited age of quantum computing.
